Flow: Systemic Predictability Comes From Eliminating Feedback Loops

TLDR

I want to get to writing about how the capacity of teams works with rates of input and throughput - but to get there I think the previous 2 posts need summarising in a way that can be taken as a simple call to action.

The summary of these posts is that if you want to go fast, have quality and do surprising things then we need to:

- employ cross-role ensemble teams. These are people working together side-by-side or on the same call (this eliminates the feedback loops)

- have multiple people working on the same thing at the same time

- focus on measuring and then reducing gross lead time

The reason to focus on gross lead time is the observation that the vast majority of time is spent within loops in the system - far more than half, potentially up to 90%. We need to make this visible. The trade is that some people will not be busy all the time, in exchange for the lead time (and so the work spent in internal feedback loops) plummeting.

These posts contain the simulations that demonstrate (what I think is) the bare minimum advantage you get from employing this thinking.

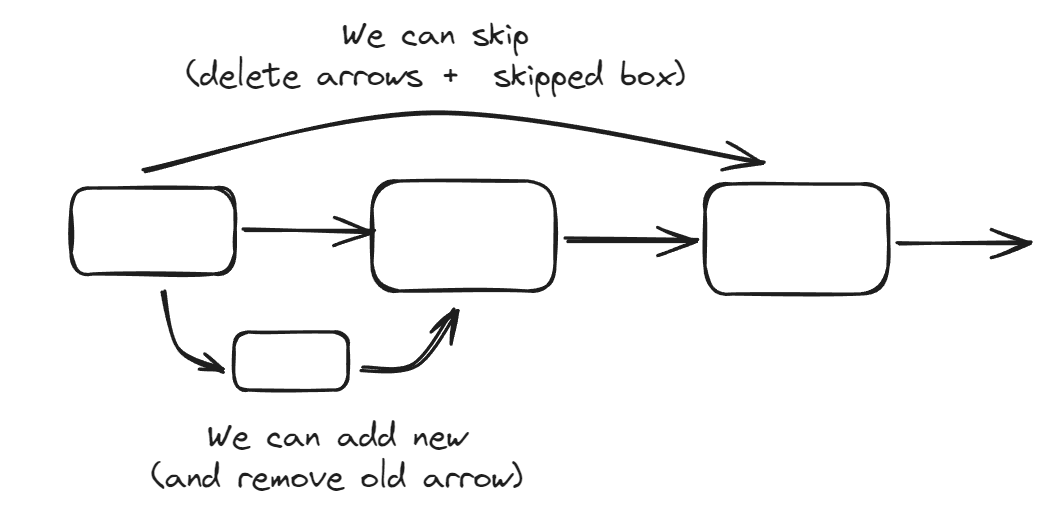

Moves in the diagram

As we are considering a single piece of work we are ignoring the complexities of split and merge. Essentially we have 2 moves - we can combine stages or we can split them up.

When we split them up we introduce implicit feedback loops to previous stages - my previous article was really about identifying the effect these have (terrifying) and talking about how our ways of working actually hide them.

But we don’t naturally consider combining stages of a process because we confuse the simplicity of having clearly defined roles with system simplicity. These 2 things actually work against each other dramatically in a multiplicative way.

Our job is producing predictable (ie low variance) systems

Just because a process is written down in a simple, broken-up way that is easy to understand does not mean the resulting system is simple to work in. I would argue that the relationship is negative and multiplicative.

From a system complexity and duration variance perspective (our primary risk on projects is cost base which is linear with lead time) it is complete insanity to introduce new steps without first combining steps because of the dramatic impact of implicit feedback loops.

In the simplest case these loops doubled the estimates but also added variance between 2x and 20x estimate to the work. Why would we choose this by adding columns to a board just to keep a scrum master busy? Do we really understand the cost of splitting things?

Predictability, frameworks, expectation and surprise

This post discussed the power of frameworks that make predictions, and of measuring against those predictions in order to experience surprise in a way that enables you to identify constraints.

By exercising deliberate practices and measuring their success together, we can find collections of practices that work well together.

This is set against a backdrop of an industry that uses the same frameworks over and over, sees the same results and redefines success as being the output of those frameworks … late, cut scope and over budget. It’s just normal. For me this is failure.

It really doesn’t have to be this way. But getting there requires medicine against all the snake oil. It ends with an appeal to ‘let’s get good’ - let’s find surprise and play again.

My most efficient team had a board that had 2 columns, doing and done. When we needed another item for doing we would all stop work and figure out what it would be. We went faster than any other team I have been on. We built companies in a few weeks. This is not only possible, it’s simple, and it results in far better mental health and relationships.

Our intuition is terrible

This post was about delivery and the variance in delivery that is a necessary result of project structures. The important thing here is that I have never met anyone in delivery or product who seems to be aware of this.

Here we introduce the idea that multiplication is not intuitive. We attempt to reduce multiplication to addition, which is intuitive, by converting it into a series of additions.

We are very bad at intuiting multiplicative systems - compound interest, growth, cost of delay, epidemics, the area of a pizza relative to its diameter (how is a 14" pizza essentially 2x10" pizzas? - but with far more topping). Area grows with the square of the diameter, so 14x14 = 196 is almost the same as 2 x 10x10 = 200.

This matters because this is how every feedback loop affects the gross lead time of systems.

If every estimate is accurate and only 1 feedback loop (with a 50% failure rate) exists at the end, we can say that on average the work will take twice as long, but also that half the time it will take between twice as long and 20 times as long. This is a truth from over 1,000,000 experiments.
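The shape of that result can be reproduced with a few lines of simulation. This is a minimal sketch, not the simulation from the original post: it assumes three stages estimated at 100 each, a single check at the end that fails 50% of the time, and that a failure sends the work back through every stage. The function names, stage sizes and trial count are illustrative choices.

```python
import random
import statistics


def lead_time_with_end_loop(stage_estimates, fail_probability, rng):
    """Total time for one work item when a check after the last stage
    fails with `fail_probability` and sends the item back to the start."""
    total = 0
    while True:
        total += sum(stage_estimates)          # one full pass through every stage
        if rng.random() >= fail_probability:   # the check passed, the item is done
            return total


def simulate_multiples(stage_estimates, fail_probability, trials, seed):
    """Lead times for `trials` work items, each as a multiple of the summed estimate."""
    rng = random.Random(seed)                  # fixed seed so runs are repeatable
    estimate = sum(stage_estimates)
    return [lead_time_with_end_loop(stage_estimates, fail_probability, rng) / estimate
            for _ in range(trials)]


# Assumed set-up: three accurate estimates of 100 and one 50% check at the end.
multiples = simulate_multiples([100, 100, 100], 0.5, trials=100_000, seed=1)

print("mean multiple of estimate:", round(statistics.mean(multiples), 2))
print("share finished on estimate:", round(multiples.count(1.0) / len(multiples), 2))
print("worst multiple seen:", max(multiples))
```

Under these assumptions the mean settles near twice the estimate and about half the items finish on estimate; the rest take at least double. The worst case grows with the number of trials, because each extra failed pass is half as likely as the one before.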

This is not because the estimates are bad or anybody is working badly - it is because that is how that system works. It is a structural problem which once seen cannot be unseen.

If the failure in that system is moved to the front rather than the back then the story is dramatically different … the mean is 1.5x but the max reduces to 10x. This movement is critical and transformative when we consider this with multiple work items.
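To see why the position of the loop matters, here is a sketch that moves the same 50% check around. It assumes two equal stages and that a failed check repeats only the stages up to and including the check; `lead_time`, `summarise` and `check_after` are hypothetical names, and the original simulation may be set up differently.

```python
import random
import statistics


def lead_time(stage_estimates, check_after, fail_probability, rng):
    """Total time when a check placed after stage index `check_after`
    fails with `fail_probability` and repeats stages 0..check_after."""
    looped = sum(stage_estimates[: check_after + 1])   # work repeated on every failure
    rest = sum(stage_estimates[check_after + 1:])      # work done once, after the check passes
    total = 0
    while True:
        total += looped
        if rng.random() >= fail_probability:
            return total + rest


def summarise(stage_estimates, check_after, trials=100_000, seed=1):
    """Mean and worst lead time as multiples of the summed estimate (50% failure assumed)."""
    rng = random.Random(seed)
    estimate = sum(stage_estimates)
    multiples = [lead_time(stage_estimates, check_after, 0.5, rng) / estimate
                 for _ in range(trials)]
    return statistics.mean(multiples), max(multiples)


stages = [100, 100]   # assumed: two equal, accurate estimates
for label, position in (("check at the back", 1), ("check at the front", 0)):
    mean_multiple, worst_multiple = summarise(stages, position)
    print(f"{label}: mean {mean_multiple:.2f}x, worst {worst_multiple:.1f}x")
```

With these assumptions the mean drops from about 2x to about 1.5x, and the worst case roughly halves, because each failure now repeats half of the work instead of all of it.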

It is the reduction of time of the long tail that makes the impact - but the time that these tasks take is not a time that we can measure because we use delivery and product practices that make it next to impossible to measure.

If we fixed this then this problem would scare people enough that we would naturally invent new better practices! But we don’t, we use Jira ….

What our terrible intuition makes us do

We break work into groups of tickets and track those tickets but we invent side processes for defects and define them as not being part of the work. We make it really hard for ourselves to have visibility of the part of the system that carries the most risk - this can only be because systemically we do not know this is true. The only thing that matters is measuring gross lead time from inception all the way to arriving with a customer.

These tickets are the same as addition: they are sequential things that when added together produce the result we want. But we have feedback loops … so whilst we may get the result with this way of modelling we can never say when. What’s worse is we add estimates that don’t account for the systemic multiplicative properties. The variance of all these things adds up, it doesn’t cancel out.
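The claim that the variance adds up can be checked with a small sketch. It assumes a project made of independent tickets, each estimated at 1 unit and each with its own 50% rework loop at the end; the function names and the ticket count are illustrative.

```python
import random
import statistics


def ticket_time(fail_probability, rng):
    """Time for one ticket estimated at 1 unit that is redone until its check passes."""
    passes = 1
    while rng.random() < fail_probability:
        passes += 1
    return passes


def project_time(ticket_count, fail_probability, rng):
    """A project modelled as tickets done one after another, so their times are summed."""
    return sum(ticket_time(fail_probability, rng) for _ in range(ticket_count))


def variances(ticket_count, trials, seed):
    """Variance of one ticket, variance of the whole project, and the project mean."""
    rng = random.Random(seed)
    single = [ticket_time(0.5, rng) for _ in range(trials)]
    project = [project_time(ticket_count, 0.5, rng) for _ in range(trials)]
    return (statistics.pvariance(single),
            statistics.pvariance(project),
            statistics.mean(project))


single_var, project_var, project_mean = variances(ticket_count=10, trials=50_000, seed=1)
print("variance of one ticket:", round(single_var, 2))
print("variance of a 10 ticket project:", round(project_var, 2))
print("mean of a 10 ticket project (estimate was 10):", round(project_mean, 2))
```

Under these assumptions the project variance comes out at roughly ten times the single-ticket variance: the spread of each ticket is carried into the total rather than averaged away, while the summed estimate of 10 stays well short of the mean.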

I.e. our ways of working, our ‘best practices’, stop us from seeing the terrifying truth: even if everything goes ok, a system constructed so that defects are found at the end is the worst possible system for producing predictable outcomes with minimal variance.

How to fix this? Convert failure later into collaboration now

This is why my 8 person team beats your 40 person team every time, and the story only gets worse with more feedback loops.

Essentially shift left is the answer, but in terms of these diagrams it is not just about taking sequential jobs and making them parallel.

It is about taking the feedback loop and turning it into a lived, present experience between 2 experts who collaborate and communicate directly to get to the outcome in whatever time it takes - because that process has no feedback loop in terms of sequence on a diagram, and so is almost guaranteed to have the properties of an additive system rather than a multiplicative one.

We go from 3 things where one fails into 2 things where nothing fails. The system returns to having the property of addition and takes 300 time, as we estimated. And this model had no feedback loop from dev, which would imply that requirements fail - with one, the gain in this scenario is actually greater.

The cost and value of this - as an industry we must not know this - we wouldn’t choose this.

The implication is that in more complex systems the benefits of this logic are many orders of magnitude greater.

We move from a system where work takes 1-2 times the estimate half the time and between 2-20 times the other half, into a system where work takes as long as estimated.

IE

- it takes 300 time + all the realities of the problem that make it worse

- vs best case 300-600 time, but half the time that is between 600 and 6000 time

so one process takes 300, the other takes between 300-6000

When you say ‘this is a straw man, in the real world there is a failure here also’ you are just increasing the impact of this kind of change - that system has another order of magnitude of benefit to be realised.

People think there is a choice about how to do things. Presented with these numbers I don’t see a choice. I see an industry that takes one option with no idea that other possible worlds exist, because why would you choose that variance?

The latter scenario is not predictable - when you add in the mitigating real factors to make the simple scenario more realistic, consider that they are all going to be multiplied in the feedback loop scenario.

Therefore we should be striving to eliminate feedback loops

What this means is that we do not want to add any steps to a delivery process unless we gain tremendous value, because by creating a single step we double all the estimates in the BEST case. Then when you add another step to that system … you are probably doubling again … we can simulate this if people want.
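The doubling can be sketched as follows. This assumes the harshest simple case, where every added step ends in a check that fails 50% of the time and sends the work back through everything before it; `time_with_loops` and `mean_multiple` are hypothetical helpers, not the simulation from the earlier posts.

```python
import random
import statistics


def time_with_loops(base_time, loop_count, fail_probability, rng):
    """Time to finish work that sits inside `loop_count` nested feedback loops.
    Each loop repeats everything inside it until its own check passes."""
    if loop_count == 0:
        return base_time                      # no checks: the work takes its estimate
    total = 0
    while True:
        total += time_with_loops(base_time, loop_count - 1, fail_probability, rng)
        if rng.random() >= fail_probability:  # this loop's check passed
            return total


def mean_multiple(loop_count, trials, seed):
    """Mean lead time as a multiple of the estimate, with 50% failure on every loop."""
    rng = random.Random(seed)
    times = [time_with_loops(100, loop_count, 0.5, rng) for _ in range(trials)]
    return statistics.mean(times) / 100


for loops in range(4):
    print(f"{loops} loops: mean {mean_multiple(loops, 50_000, seed=1):.2f}x the estimate")
```

Under these assumptions the mean multiple roughly doubles with each added loop (about 1x, 2x, 4x, 8x). Loops that only repeat part of the work would cost less than this.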