Complexity Kills Projects - But You *Can* Measure It

Complexity kills projects

It brings everything to a halt, as more and more energy goes into changing the existing system rather than into getting the desired work done.

There is a wonderful equation in physics: Work Done is Force times Distance.

If you fail to move something over a distance, then you have not done any meaningful work. Think about that next time you are holding a weight - it doesn’t mean you are not expending energy. It just means that energy hasn’t gone into moving a thing to its next place.

This is what happens in businesses that cannot keep up - tremendous effort is expended, but, it, just, can’t, quite, get, there.

This maps onto what we see: we invest in things but we see no results. There is no work being done - the organisation is essentially straining to get a great weight to budge.

Complexity is what causes the inertia.

If we measured Gross Lead Time of work we would have a nice large global indicator that tells us how our complexity is growing.
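As a rough sketch of what tracking this could look like: the code below assumes that Gross Lead Time means the elapsed calendar time from when a piece of work is requested to when it is delivered (this post does not pin the definition down). The `WorkItem` record, its field names and the sample dates are all made up for illustration.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median


@dataclass
class WorkItem:
    # Hypothetical record: field names are illustrative, not from a real tracker.
    name: str
    requested: date
    delivered: date


def gross_lead_time_days(item: WorkItem) -> int:
    """Elapsed calendar days from request to delivery (assumed definition)."""
    return (item.delivered - item.requested).days


def median_lead_time(items: list[WorkItem]) -> float:
    """Median lead time in days across a batch of delivered work items."""
    return median(gross_lead_time_days(i) for i in items)


# Made-up sample data for two consecutive quarters.
q1 = [
    WorkItem("a", date(2023, 1, 2), date(2023, 1, 12)),
    WorkItem("b", date(2023, 1, 9), date(2023, 1, 23)),
    WorkItem("c", date(2023, 2, 1), date(2023, 2, 13)),
]
q2 = [
    WorkItem("d", date(2023, 4, 3), date(2023, 4, 24)),
    WorkItem("e", date(2023, 4, 10), date(2023, 5, 10)),
    WorkItem("f", date(2023, 5, 1), date(2023, 5, 26)),
]

print("Q1 median lead time (days):", median_lead_time(q1))
print("Q2 median lead time (days):", median_lead_time(q2))
```

A median that keeps climbing from one period to the next is the kind of global signal described above. It does not say where the complexity is, only that it is growing.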

What is complexity?

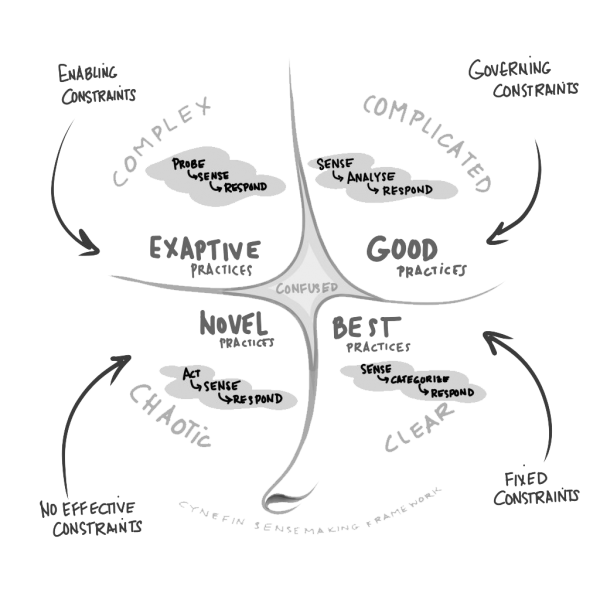

Cynefin tells us that a complex system is one where we do not fully understand the constraints.

This image was taken from the above page on Cynefin. It belongs to Cognitive Edge Ltd.

Surprise

The symptom of this is that we try to do things and we get ‘surprised’ - what we understood turned out to be false, so something unexpected happened. Two things came together in a way we hadn’t anticipated and produced a behavior we didn’t expect - this is the basis for exaptation and learning new things.

Surprise - measurable

A surprise is an observable event, which means that we can also measure it. The way we fail is important: maybe we violated a governing constraint (complicated and simple rules), but maybe we also found something new - which can enable us to do something new.

Complecting things together

Another definition of complexity comes from dictionaries, and concerns itself with tying things together.

Oxford Learner’s Dictionary: ‘made of many different things or parts that are connected; difficult to understand’

Rich Hickey talks a lot about complexity and simplicity.

He says ‘Simple, comes from sim-plex - one fold’. This maps wonderfully onto Cynefin - but the way the two use the word complexity is very different.

Complecting - Measurable

We can measure how tied together things are by simply looking at a change and counting the systems that need to change to implement it.

When this number exceeds 2 then we are interested.

Imagine a requirement to send an email when something happens. There should be 2 pieces of work:

- Exposing the event of the something that happens

- Some work to implement the email being sent when that event happens

When we involve more systems and teams then we know something interesting is happening and we have a candidate for redesigning our team structures.
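The counting itself can be very small. Here is a minimal sketch, assuming we keep a record of which systems each delivered change touched. The `Change` record and the system names are hypothetical, and the threshold of 2 is the heuristic from this section.

```python
from dataclasses import dataclass, field

THRESHOLD = 2  # heuristic from the text: more than 2 systems is "interesting"


@dataclass
class Change:
    # Hypothetical record of one delivered change and the systems it touched.
    description: str
    systems_touched: set[str] = field(default_factory=set)


def interesting_changes(changes, threshold=THRESHOLD):
    """Return the changes that touched more systems than the threshold."""
    return [c for c in changes if len(c.systems_touched) > threshold]


# Made-up examples: system names are illustrative only.
changes = [
    Change("send email on order shipped", {"orders", "notifications"}),
    Change("send email on refund", {"payments", "orders", "crm", "notifications"}),
]

for c in interesting_changes(changes):
    print(f"{c.description}: {len(c.systems_touched)} systems")
```

The first change fits the two-pieces-of-work shape and is ignored. The second touches four systems, so it is flagged as worth a closer look.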

Predictability

Some systems are unpredictable by their very nature.

When we combine systems that exhibit surprise naturally - because people are the actors - with badly coupled systems then that surprise can move into other systems quite far away. This vastly undermines confidence in changing parts of a system and leads to full system testing.

We know that we cannot know both the exact position and velocity of a particle. We know that if we have 3 bodies then their future state as they interact is highly unpredictable. We know that if we tell kids to do something they will do the exact opposite.

These systems all share a problem: even if we knew the rules they use to decide action, we still do not have access to the starting state with sufficient accuracy.

We have systems that involve literally hundreds of bodies (projects). We also have systems of individuals that are also highly complex and unpredictable. People do not do things as we expect.

But other systems are well understood - we know that if we want boiling water we can heat water and (provided we have sufficient atmospheric pressure) it will eventually boil. Likewise models for customer support or accounting are well understood, formalised and commoditised.

If we know what kind of system we are dealing with we can partition it and behave very differently in different places. We will come back to this with contracts.

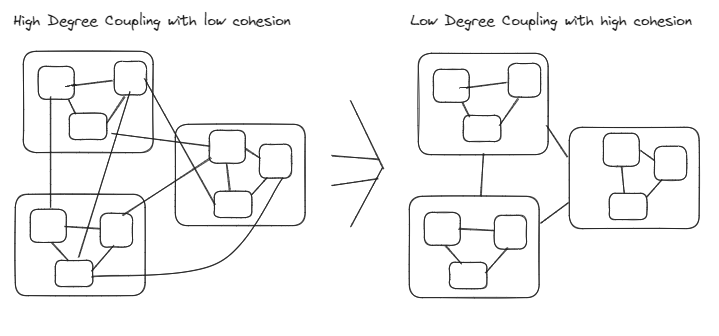

Coupling + Cohesion:

Coupling is what delivers value - but too much in the wrong ways is toxic. When we build something we are putting 2 things that can work together to achieve an end next to each other. We do not want to tie them together and make them one thing; we hold them apart and make them talk via their connectors.

Rich Hickey is actually talking about complexity and simplicity - but there is a huge overlap.

Getting this wrong means that we have to consider more and more of the system for every change we make. When people talk about the ‘cognitive load’ of people and teams as a guide to sizing, it is Rich Hickey that I first saw talking about this in software, back in 2011 (although Cockburn predates him with Crystal Methods). Deming and other systems thinkers also talk about this more generally.

Bad coupling and cohesion would be the same as different organisms that share internal organs. Instead of things being connected by their externalities, things become cross linked by their innards. In nature when this happens the organisms do not live for long - and so this kind of coupling and cohesion doesn’t exist for long. However, we do not have these selection processes - except for the company failing, but that’s too late!

This means that when we change one organism we induce change in the connected organism. This is really bad, and it usually compounds: in systems where this happens once, it tends to happen with many organisms, so there is a chain reaction.

In nature organisms die, but in our world when we evolve into a bad state the business dies and nobody in other businesses finds out why. This lets us repeat the same mistakes over and over as a population with very little corrective feedback.

But we can also measure coupling + cohesion over time. Adam Tornhill does this by treating code as a crime scene, and built CodeScene as a result. It turns out that, because we have a record of how code changes over time, we can use it not only to decide what code to refactor but also to analyse team structures, evaluate architectural fit and so indirectly evaluate delivery architecture. I will come back to this in another post.
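To give a feel for the idea, here is a deliberately naive sketch that counts how often pairs of files change in the same commit. It is not CodeScene's algorithm, just a simple co-change count. The commit history is made-up in-memory data, and the file paths are hypothetical. In practice the list could be built from version control history.

```python
from collections import Counter
from itertools import combinations


def co_change_counts(commits):
    """Count how often each pair of files changed in the same commit."""
    pairs = Counter()
    for files in commits:
        # Sort so each pair always appears in the same order as a key.
        for a, b in combinations(sorted(set(files)), 2):
            pairs[(a, b)] += 1
    return pairs


# Made-up history: each inner list is the files touched by one commit.
commits = [
    ["billing/invoice.py", "orders/order.py"],
    ["billing/invoice.py", "orders/order.py", "email/send.py"],
    ["email/send.py"],
    ["billing/invoice.py", "orders/order.py"],
]

counts = co_change_counts(commits)
for (a, b), n in counts.most_common(3):
    print(f"{a} <-> {b}: changed together {n} times")
```

Files in different systems that keep changing together are a hint that the systems are coupled through their innards. Grouping the same counts by owning team rather than by file gives a rough view of team coupling.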

Coupling and cohesion can be measured and tracked and can tell us when our design is suffering from complexity.

A simple heuristic is this: When a change is made to a system how many parts of the system have to change? If it is more than 2 then something potentially interesting is happening.

Coupling is when 2 different systems have a dependency of state across them.

We can decouple them by defining contracts of communication around behaviors instead of directly depending upon one another’s state. Through this contract we gain boundaries, which we couple to instead of the innards.
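A minimal sketch of such a contract, reusing the email example from earlier. The class and method names (`OrderEvents`, `on_shipped`, `OrderSystem`, `EmailNotifier`) are hypothetical. The point is only that the email side depends on a behavior ("an order shipped") and never reads the order system's internal state.

```python
from typing import Protocol


class OrderEvents(Protocol):
    """Hypothetical contract: the only thing other systems may depend on."""

    def on_shipped(self, order_id: str) -> None: ...


class EmailNotifier:
    """Reacts to the 'shipped' behavior; knows nothing about order state."""

    def __init__(self) -> None:
        self.sent: list[str] = []

    def on_shipped(self, order_id: str) -> None:
        self.sent.append(f"Your order {order_id} has shipped")


class OrderSystem:
    def __init__(self, listener: OrderEvents) -> None:
        self._status: dict[str, str] = {}  # internal state, never shared
        self._listener = listener

    def ship(self, order_id: str) -> None:
        self._status[order_id] = "shipped"
        # Communicate through the contract instead of exposing _status.
        self._listener.on_shipped(order_id)


notifier = EmailNotifier()
orders = OrderSystem(notifier)
orders.ship("A-1")
print(notifier.sent)
```

The order system can change how it stores status without the notifier noticing, as long as it keeps calling `on_shipped`.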

Predictability

Some systems are highly sensitive to initial state - 3 body physics, for example. But systems that depend on people also exhibit this behavior.

This matters because we can use coupling and cohesion to partition systems. If we know some parts of a system involve customers or growth we can draw boxes around these to contain the complexity so that other systems can remain simple + complicated. We cannot remove this kind of complexity.

As we can measure surprise and then analyse the cause of the surprise we can also evaluate how well our partitioning is working.
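One way this evaluation could be sketched is to log each surprise with the system that caused it and the system where it was observed, then look at how many escaped their partition. The `Surprise` record, the `leak_rate` name and the system names are assumptions for illustration, not an established metric.

```python
from dataclasses import dataclass


@dataclass
class Surprise:
    # Hypothetical incident record: where the cause was, and where it showed up.
    cause_system: str
    observed_in: str


def leak_rate(surprises):
    """Fraction of surprises observed outside the system that caused them."""
    if not surprises:
        return 0.0
    leaked = sum(1 for s in surprises if s.cause_system != s.observed_in)
    return leaked / len(surprises)


# Made-up log of surprises after analysing their causes.
log = [
    Surprise("growth", "growth"),
    Surprise("growth", "accounting"),
    Surprise("customers", "customers"),
    Surprise("customers", "customers"),
]

print(f"{leak_rate(log):.0%} of surprises escaped their partition")
```

If the partitioning is working, surprises from the complex parts should mostly stay inside their box, so this fraction should stay low over time.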

Complexity Conclusion

Complexity is inherent, however it can be contained and even exploited.

We can measure surprise and track what systems change when we make a change to get great insight into how well our designs are working for us.

I am constantly surprised at how little measurement of these easy to measure things is done by the industry. I know I have not fully invested into it either!

What strikes me most is the absolute lack of any dialogue around whether the designs of our delivery and product structures are fit for purpose (this happens a bit in software architecture, but nowhere near enough there either) - I think it’s because we haven’t sufficiently defined it.

The cost of not investing in this is that the inertia from complexity can make the investment required to get work done grow, potentially exponentially.